Hello all,

I have been reading as much as I can and still cannot find out what I'm doing wrong, so hopefully you folks can help me out a bit.

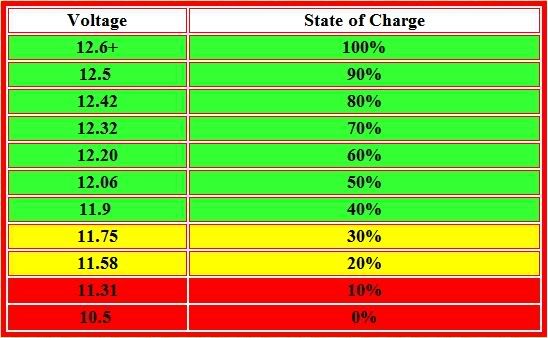

I have a homebuilt 18 V, 3.5 A PV panel feeding a switching charge controller that charges a 12 V 35 Ah SLA battery. I then hook this battery to a 400 W inverter to power (usually) a hydroponic garden rated at 120 V AC, 0.8 A. I have been logging the battery's voltage under load as it discharges to get a better idea of the discharge curve. When I first connect the device I see about 12.88 VDC. I then measure the voltage across the battery terminals every few minutes to see how it drops, and when it reaches 10.9 VDC I disconnect to protect the battery.

The problem: at 0.8 A, the battery drops from 12.8 VDC to 10.9 VDC in only 6-7 hours. My math says that if 12.88 VDC is fully charged and 10.8 VDC is about 40% charged, I should have about 21 Ah to play with (60% of 35 Ah). I measured the current from the battery to the inverter and from the inverter to the device, and the efficiency loss looks like about 20% (which I believe is within spec).
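The usable-capacity estimate above can be written out as a small calculation. This is a minimal sketch that treats capacity as linear in state of charge and ignores the Peukert effect (lead-acid batteries deliver less than their rated Ah at high discharge rates). The 100% and 40% state-of-charge figures are simply the post's own estimates for 12.88 V and the cutoff voltage.

```python
# Rough usable-capacity estimate for a lead-acid battery.
# Assumes capacity scales linearly with state of charge (SoC) and ignores
# the Peukert effect, so real capacity at high currents will be lower.

RATED_AH = 35.0        # nameplate capacity of the SLA battery
SOC_FULL = 1.0         # assumed SoC at ~12.88 V resting
SOC_CUTOFF = 0.40      # the post's estimate of SoC at the cutoff voltage


def usable_ah(rated_ah: float, soc_start: float, soc_end: float) -> float:
    """Amp-hours available when discharging from soc_start down to soc_end."""
    return rated_ah * (soc_start - soc_end)


if __name__ == "__main__":
    print(f"Usable capacity: {usable_ah(RATED_AH, SOC_FULL, SOC_CUTOFF):.1f} Ah")
```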

So, at 0.8 A and 120 V AC, I should be pulling 96-100 W, and 100 W / 12 VDC = 8.33 A. As I type this, the proverbial lightbulb has just gone off. Heh. In the interest of not wasting all this typing: does this all sound correct and within specification? Is my battery fully charging? Can I really only expect to get 250-300 watt-hours from a 12 V 35 Ah battery?
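The chain of arithmetic in that paragraph (AC load to watts, watts to battery-side DC current, then runtime and energy) can be sketched as below. It is only an estimate under stated assumptions: the garden's 0.8 A rating is treated as a continuous draw, inverter efficiency is taken as the roughly 80% measured above, and the 21 Ah usable figure is the linear estimate from the previous step with no Peukert correction. Comparing the estimated runtime against the observed 6-7 hours is a quick sanity check on whether the assumed load matches the real one.

```python
# Estimate battery-side current, runtime, and usable energy for an
# inverter-driven AC load. All inputs are assumptions taken from the post;
# substitute measured values where you have them.


def ac_load_watts(volts_ac: float, amps_ac: float) -> float:
    """Apparent AC power, treating the load as resistive (power factor 1)."""
    return volts_ac * amps_ac


def dc_current(ac_watts: float, battery_volts: float, inverter_efficiency: float) -> float:
    """DC amps drawn from the battery to supply ac_watts through the inverter."""
    return ac_watts / (inverter_efficiency * battery_volts)


def runtime_hours(usable_ah: float, dc_amps: float) -> float:
    """Hours until the usable capacity is exhausted at a constant current."""
    return usable_ah / dc_amps


def usable_wh(usable_ah: float, battery_volts: float) -> float:
    """Usable energy in watt-hours (not watts)."""
    return usable_ah * battery_volts


if __name__ == "__main__":
    watts = ac_load_watts(120.0, 0.8)            # 96 W nominal load
    amps = dc_current(watts, 12.0, 0.80)         # includes ~20% inverter loss
    print(f"AC load:       {watts:.0f} W")
    print(f"Battery draw:  {amps:.2f} A")
    print(f"Est. runtime:  {runtime_hours(21.0, amps):.1f} h")
    print(f"Usable energy: {usable_wh(21.0, 12.0):.0f} Wh")
```

Note that the result of `usable_wh` is energy in watt-hours; a battery's capacity is not a fixed number of watts.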

Thank you for suffering through my first post and potentially stupid question.